Project Type: Autonomous + Voice Controlled Raspberry Pi Robot

Use Case: Obstacle Avoidance, Voice Interaction, Image Understanding, Mobile/Web/Bluetooth Control

Tagline: “Building the Bots of Tomorrow.”

Key Features

Voice Control

- Robot listens for commands like:

  - forward, backward, left, right, stop

  - scan → scans surroundings with the servo-mounted ultrasonic sensor

  - photo → captures an image and describes it in Hindi or another local language

  - auto / manual → switches modes

  - game → plays Rock-Paper-Scissors with the user

Laptop runs AI backend using Whisper (voice-to-text) and LLaVA or LLaMA3 (for understanding commands or describing images).
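
The command words listed above can be picked out of recognized speech in several ways. What follows is a minimal sketch, assuming the speech-to-text step returns a plain English string. `COMMANDS` and `parse_command` are hypothetical names used for illustration and are not part of the original script. The sketch matches whole words only, so a word such as "leftovers" does not trigger `left`, and it returns whichever command appears first in the sentence.

```python
import re

# Hypothetical command vocabulary, taken from the feature list above.
COMMANDS = ("forward", "backward", "left", "right", "stop",
            "scan", "photo", "auto", "manual", "game")

# \b anchors make sure only whole words are matched.
_PATTERN = re.compile(r"\b(" + "|".join(COMMANDS) + r")\b", re.IGNORECASE)


def parse_command(text):
    """Return the first known command found in text, or None."""
    match = _PATTERN.search(text or "")
    return match.group(1).lower() if match else None


if __name__ == "__main__":
    print(parse_command("Please move Forward a little"))
    print(parse_command("eat the leftovers"))
```

Returning `None` for unrecognized input lets the caller decide whether to ask the user to repeat the command or to pass the text on to the language model.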

AI Image Analysis (LLaVA or similar model)

- Robot captures image → sends to Flask API on laptop

- API uses Ollama + Vision model (like LLaVA or Gemma) to describe the scene

- Robot speaks the result in Hindi or any other local language
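
The request that the laptop sends to Ollama for an image description can be sketched as a small helper. This is an illustration under assumptions: `build_caption_payload` is a hypothetical name, the prompt wording is invented here, and whether a given vision model answers well in Hindi depends on the model. The field names (`model`, `prompt`, `images`, `stream`) are the ones used by the laptop code later in this post.

```python
import base64


def build_caption_payload(image_bytes, language="Hindi", model="llava"):
    """Build the JSON body for a request to Ollama's /api/generate endpoint."""
    return {
        "model": model,
        # Assumed prompt wording; adjust it for the chosen model and language.
        "prompt": f"Describe this image in one short sentence in {language}.",
        # Ollama expects images as base64-encoded strings.
        "images": [base64.b64encode(image_bytes).decode("utf-8")],
        "stream": False,
    }


if __name__ == "__main__":
    payload = build_caption_payload(b"\xff\xd8fake-jpeg-bytes")
    print(payload["prompt"])
```

Keeping the language as a parameter makes it easy to switch the spoken description to another local language without touching the rest of the pipeline.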

Autonomous Obstacle Avoidance

- Ultrasonic sensor on a servo motor scans left/center/right

- Robot chooses the clearest path and moves

- Captures image of obstacle and describes it aloud

- Can be toggled with voice or a physical button
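
Choosing the clearest path from a left/center/right scan can be sketched as a pure function. This is one possible approach, not necessarily the logic the robot runs: `choose_direction` is a hypothetical name, and the 25 cm default mirrors the obstacle threshold used in the Raspberry Pi script in this post.

```python
def choose_direction(readings_cm, min_clear_cm=25):
    """Pick the direction with the largest distance reading.

    readings_cm maps "left" / "center" / "right" to distances in cm.
    Returns "reverse" when no direction has at least min_clear_cm free.
    """
    direction, distance = max(readings_cm.items(), key=lambda item: item[1])
    return direction if distance >= min_clear_cm else "reverse"


if __name__ == "__main__":
    scan = {"left": 40.0, "center": 12.5, "right": 80.0}
    print(choose_direction(scan))
```

Because the function takes plain numbers, it can be tested on a laptop without any sensor attached and then fed with real readings on the robot.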

Web Control Panel

- Flask web server on Pi with a simple control UI

- Buttons: Forward, Backward, Left, Right, Stop, Scan, Dance, Auto, Manual, Photo

- Mobile-friendly interface for easy control

Mobile / Bluetooth Control (Coming Soon or Optional)

- Option to add Bluetooth controller (HC-05 or phone)

- Can control basic movements via joystick or Bluetooth terminal app

Fun Features

- robo_dance(): a predefined dance sequence

- play_rock_paper_scissors(): a simple game using voice prompts

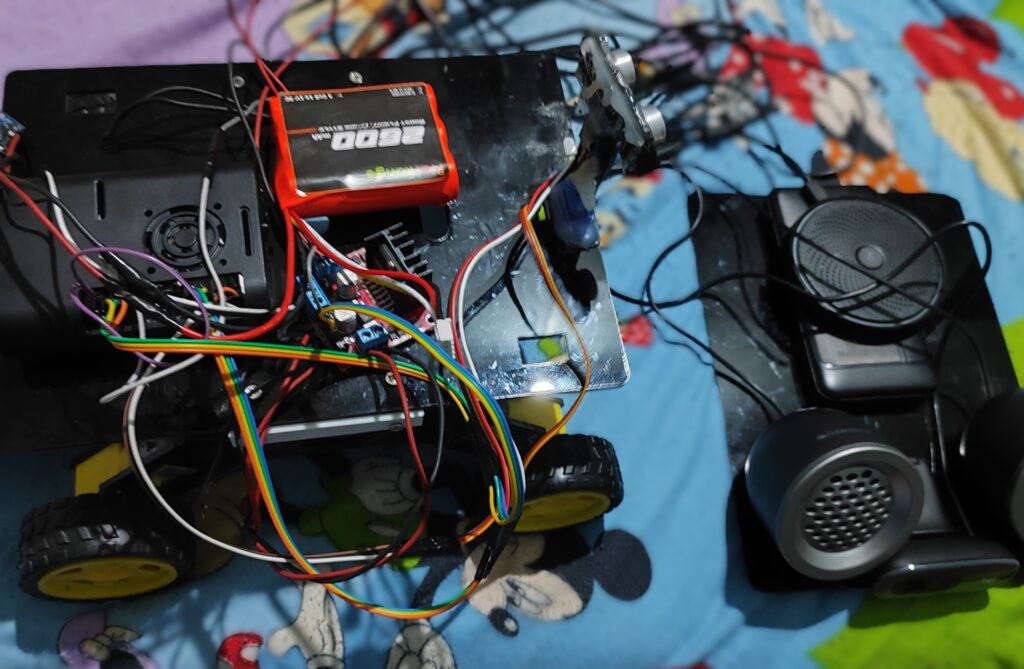

Hardware Components

| Component | Description |
|---|---|
| Raspberry Pi 5 | Main brain (runs Flask, motor control, etc.) |
| Ultrasonic Sensor (HC-SR04) | For obstacle detection |
| Servo Motor | Rotates the ultrasonic sensor for scanning |
| 4x DC Gear Motors | For movement (connected via L298N) |
| L298N Motor Driver | Controls the motors |
| Pi Camera 2 | Takes photos for AI processing |
| Speaker | For voice output (any local language TTS) |
| Button | To toggle auto/manual mode |
| Power Supply | Battery or power bank |
| Wi-Fi | For communication with laptop |
| Laptop | Runs Ollama models and Flask APIs |

Software Architecture

          ┌────────────────────┐
          │    Voice Input     │
          │  (Hey Robo, etc.)  │
          └────────┬───────────┘
                   ↓
    ┌─────────────────────────────┐
    │  Laptop with Whisper + AI   │
    │  Flask API (Text/Image)     │
    └──────┬──────────────┬───────┘
           ↓              ↓
     Voice command    Image caption
  (e.g., "forward")   (e.g., "यह एक दरवाजा है।")
           ↓              ↓
    ┌─────────────────────────────┐
    │    Raspberry Pi (Robot)     │
    │  Flask server + GPIO Logic  │
    └─────────────────────────────┘

Technologies Used

- Python (Flask, GPIO Zero, threading)

- Spring Boot (Java backend on laptop)

- Ollama + LLaVA / LLaMA3 / Gemma

- Whisper (voice to text)

- Google Translate / gTTS (Hindi TTS)

- HTML/CSS/JS for control panel

Raspberry Pi setup Code

- Python libraries: gpiozero, picamera2, pygame, speech_recognition, requests, gtts, pydub, flask

- arecord for audio recording

- ffmpeg for audio conversion (MP3 to WAV if needed)

import os

import requests

import time

from gpiozero import Motor, DistanceSensor, AngularServo

from picamera2 import Picamera2

from gtts import gTTS

import pygame

import speech_recognition as sr

# --- GPIO Setup ---

motor_left = Motor(forward=5, backward=6)

motor_right = Motor(forward=13, backward=19)

ultrasonic = DistanceSensor(echo=27, trigger=17)

servo = AngularServo(18, min_angle=0, max_angle=180)

# --- Camera ---

picam = Picamera2()

picam.configure(picam.create_still_configuration())

# --- AI Backend ---

VOICE_API = "http://192.168.215.29:8080/api/ai/voice"

IMAGE_API = "http://192.168.215.29:8080/api/ai/image"

# --- Global State ---

automatic_mode = False

# --- Movement Functions ---

def move_forward(t=1):
    motor_left.forward()
    motor_right.forward()
    time.sleep(t)
    stop()

def move_backward(t=1):
    motor_left.backward()
    motor_right.backward()
    time.sleep(t)
    stop()

def turn_left():
    motor_left.backward()
    motor_right.forward()
    time.sleep(0.5)
    stop()

def turn_right():
    motor_left.forward()
    motor_right.backward()
    time.sleep(0.5)
    stop()

def stop():
    motor_left.stop()
    motor_right.stop()

# --- Image Functions ---

def capture_image():
    filename = "/tmp/obstacle.jpg"
    picam.start()
    time.sleep(1)
    picam.capture_file(filename)
    picam.stop()
    return filename

def send_image_to_laptop(filepath):
    with open(filepath, "rb") as f:
        files = {"image": f}
        res = requests.post(IMAGE_API, files=files)
    return res.json().get("caption", "No description found")

# --- Speech Functions ---

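def speak(text, lang="en"):
    # Convert the text to speech and save it as an MP3 file
    tts = gTTS(text, lang=lang)
    filename = "/tmp/speak.mp3"
    tts.save(filename)
    pygame.mixer.init()
    pygame.mixer.music.load(filename)
    pygame.mixer.music.play()
    # Wait until playback finishes; sleep briefly instead of spinning the CPU
    while pygame.mixer.music.get_busy():
        time.sleep(0.1)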

# --- Sensor Functions ---

def scan_surroundings():
    angles = [0, 45, 90, 135, 180]
    for angle in angles:
        servo.angle = angle
        time.sleep(0.3)
        dist = ultrasonic.distance * 100
        print(f"Angle {angle}°: {dist:.2f} cm")
    servo.angle = 90

# --- Autonomous Mode Loop ---

def auto_mode_loop():
    global automatic_mode
    servo.angle = 90
    while automatic_mode:
        dist = ultrasonic.distance * 100
        print(f"Distance: {dist:.2f} cm")
        if dist < 25:
            stop()
            image = capture_image()
            caption = send_image_to_laptop(image)
            speak(f"Stop! Obstacle ahead: {caption}")
            turn_left()
        else:
            move_forward(0.5)
        time.sleep(0.1)

# --- Voice Recording & AI Interaction ---

def record_and_send_voice():
    os.system("arecord -D plughw:1,0 -f cd -t wav -d 4 -r 16000 /tmp/voice.wav")
    rec = sr.Recognizer()
    with sr.AudioFile("/tmp/voice.wav") as source:
        audio = rec.record(source)
    try:
        text = rec.recognize_google(audio, language="en-US")
        print(f"Recognized: {text}")
        res = requests.post(VOICE_API, json={"text": text})
        reply = res.json().get("reply", "")
        print(f"AI says: {reply}")
        speak(reply, lang="en")
        handle_ai_command(reply)
    except Exception as e:
        print("Error:", e)
        speak("Sorry, I didn't understand.")

# --- Command Handling ---

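import threading  # used to run autonomous mode in the background

def handle_ai_command(command):
    global automatic_mode
    # The AI reply may be capitalized, so compare in lower case
    command = command.lower()
    if "forward" in command:
        move_forward()
    elif "backward" in command:
        move_backward()
    elif "left" in command:
        turn_left()
    elif "right" in command:
        turn_right()
    elif "stop" in command:
        stop()
    elif "scan" in command:
        scan_surroundings()
    elif "photo" in command:
        # Capture a photo and speak the laptop's description in Hindi
        speak(send_image_to_laptop(capture_image()), lang="hi")
    elif "dance" in command:
        robo_dance()
    elif "game" in command:
        play_rock_paper_scissors()
    elif "auto" in command:
        if not automatic_mode:
            automatic_mode = True
            # Run the loop in a background thread so that a later
            # "manual" command can still be handled and end it
            threading.Thread(target=auto_mode_loop, daemon=True).start()
    elif "manual" in command:
        automatic_mode = False
        stop()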

# --- Extras ---

def robo_dance():
    for _ in range(2):
        turn_left()
        turn_right()
    speak("I'm dancing!", lang="en")

def play_rock_paper_scissors():
    speak("Rock, paper, or scissors! I choose: paper", lang="en")
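
An autonomous loop such as `auto_mode_loop()` needs a way to be switched off while it is running, for example when a "manual" command or a button press arrives. One possible pattern is sketched here with placeholder callbacks instead of real motor and sensor calls, so it runs without any hardware. The names `auto_enabled`, `auto_loop`, `start_auto`, and `stop_auto` are hypothetical, and the 25 cm threshold is taken from the script above.

```python
import threading
import time

# Set = automatic mode, cleared = manual mode.
auto_enabled = threading.Event()


def auto_loop(read_distance_cm, on_obstacle, on_clear, threshold_cm=25, poll_s=0.01):
    """Run until auto_enabled is cleared; callbacks stand in for robot actions."""
    while auto_enabled.is_set():
        if read_distance_cm() < threshold_cm:
            on_obstacle()
        else:
            on_clear()
        time.sleep(poll_s)


def start_auto(read_distance_cm, on_obstacle, on_clear):
    """Start the loop in a daemon thread and return the thread."""
    auto_enabled.set()
    worker = threading.Thread(
        target=auto_loop,
        args=(read_distance_cm, on_obstacle, on_clear),
        daemon=True,
    )
    worker.start()
    return worker


def stop_auto(worker):
    """Switch back to manual mode and wait for the loop to exit."""
    auto_enabled.clear()
    worker.join()


if __name__ == "__main__":
    events = []
    worker = start_auto(lambda: 100.0,
                        lambda: events.append("obstacle"),
                        lambda: events.append("clear"))
    time.sleep(0.05)
    stop_auto(worker)
    print(f"loop ran {len(events)} times, still running: {worker.is_alive()}")
```

On the robot, `read_distance_cm` could wrap `ultrasonic.distance * 100`, and the two callbacks could wrap the existing obstacle handling and `move_forward()` calls.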

Laptop setup Code

Project Structure

ai_backend/

├── main.py

├── voice_processor.py

├── image_processor.py

├── requirements.txt

└── static/

Flask App

requirements.txt

flask

requests

gtts

pydub

main.py

from flask import Flask, request, jsonify
from voice_processor import handle_voice_command
from image_processor import handle_image_caption

app = Flask(__name__)

@app.route("/api/ai/voice", methods=["POST"])
def voice():
    text = request.json.get("text")
    result = handle_voice_command(text)
    return jsonify({"reply": result})

@app.route("/api/ai/image", methods=["POST"])
def image():
    file = request.files.get("image")
    if not file:
        return jsonify({"error": "No image uploaded"}), 400
    caption = handle_image_caption(file)
    return jsonify({"caption": caption})

if __name__ == "__main__":
    app.run(host="0.0.0.0", port=8080)

voice_processor.py

import requests

OLLAMA_URL = "http://localhost:11434/api/generate"

def handle_voice_command(text):
    prompt = f"You are a robot controller. Respond to this command: '{text}'"
    payload = {
        "model": "borch/llama3_speed_chat",
        "prompt": prompt,
        "stream": False
    }
    res = requests.post(OLLAMA_URL, json=payload)
    return res.json().get("response", "").strip()

image_processor.py

import requests
import base64
from gtts import gTTS
import os

OLLAMA_URL = "http://localhost:11434/api/generate"

def handle_image_caption(file):
    # Ollama expects images as base64-encoded strings
    image_data = base64.b64encode(file.read()).decode("utf-8")
    # Ask for Hindi explicitly, since the caption is spoken with a Hindi voice
    prompt = "Describe this image in Hindi."
    payload = {
        "model": "llava",
        "prompt": prompt,
        "images": [image_data],
        "stream": False
    }
    res = requests.post(OLLAMA_URL, json=payload)
    hindi_caption = res.json().get("response", "").strip()

    # Speak it
    tts = gTTS(hindi_caption, lang="hi")
    filename = "/tmp/output.mp3"
    tts.save(filename)
    os.system(f"mpg123 {filename} &")  # Or another player

    # Return the caption that was generated (the original returned an undefined name)
    return hindi_caption

Future Enhancements

- Add GPS for location tracking

- Add camera streaming to web panel

- Add lidar or IR sensors for better navigation

- Add NLP model on Pi for local processing

- Offline Hindi TTS for better reliability

- Bluetooth/Joystick support
